ACAnalysis testing

Apart from the rather complex Procyon test, there has been no straightforward way to measure AI PC performance. MLCommons has addressed that gap with MLPerf Client, especially with the GUI-equipped v1.0 release that arrived just days ago.

Created by MLCommons with participation from hardware vendors including AMD, Intel, and Qualcomm, among others, MLPerf Client is a popular benchmark for testing AI PC performance on large language models (LLMs).

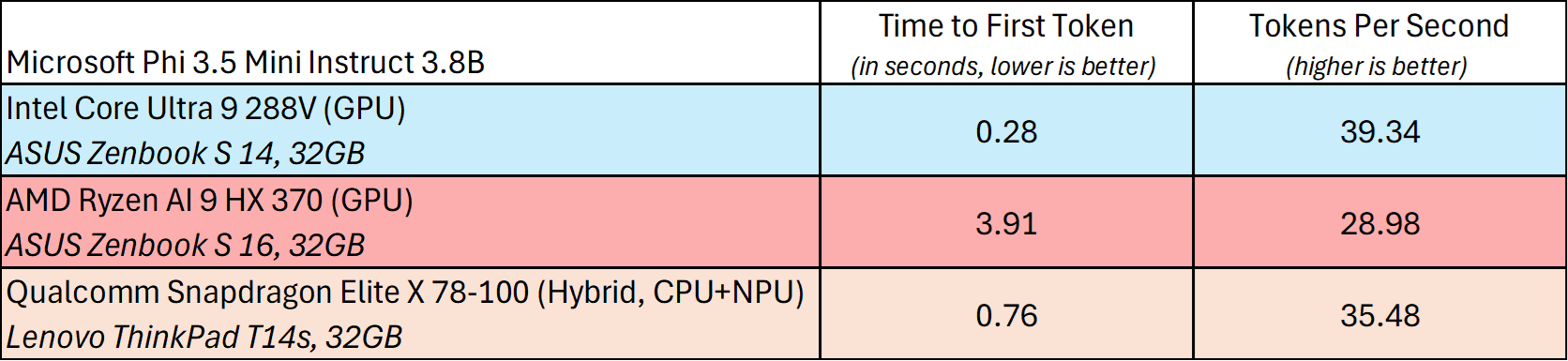

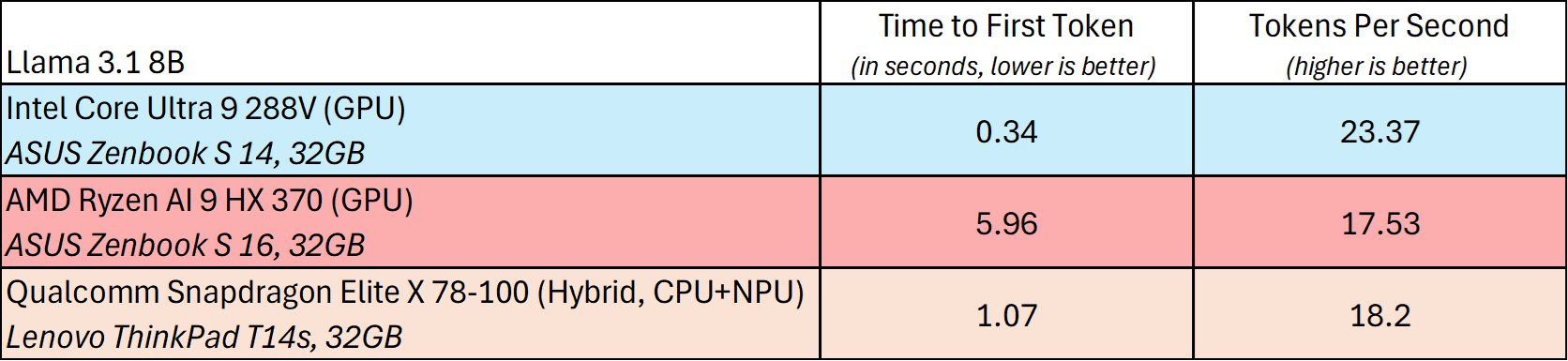

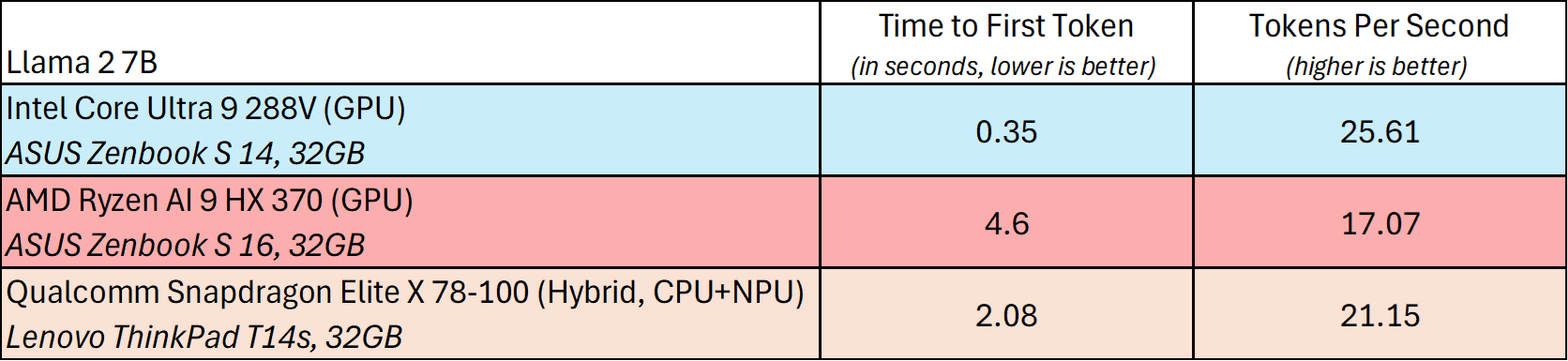

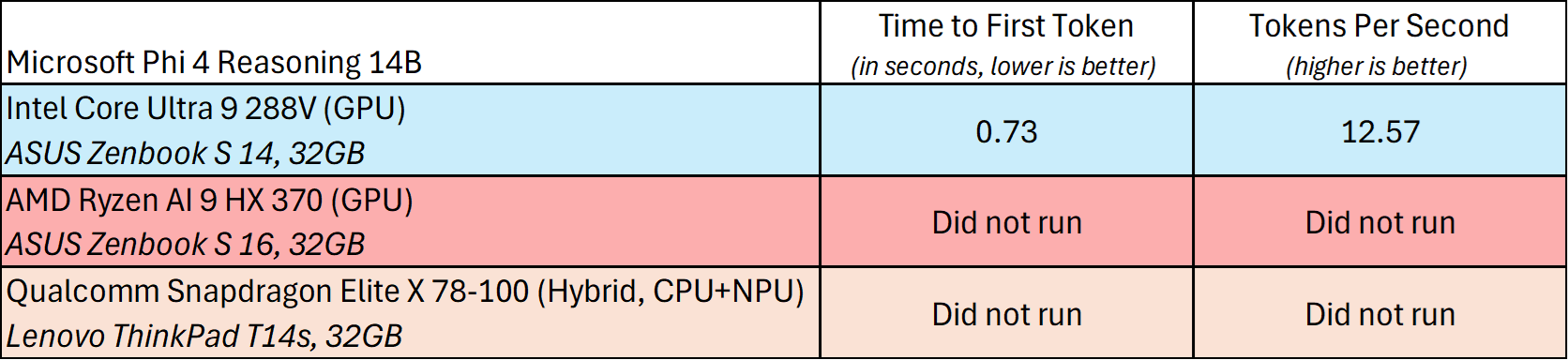

MLPerf Client v1.0 covers more popular AI models, including Llama 2 7B, Llama 3.1 8B, Microsoft Phi 3.5 Mini 3.8B, and Microsoft Phi 4 Reasoning 14B. Also new in v1.0 is a GUI option, and since MLPerf Client is open source and free to download, it should be easy for end users to try out.

ACAnalysis tested the latest benchmark on comparable hardware from all three vendors, each using its own preferred runtime. We found that Intel led, with strong GPU performance from its Intel Arc Graphics on both key metrics: time to first token and tokens per second. Qualcomm placed second with its NPU results, while AMD was a distant third.
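To make the two metrics concrete, here is a minimal Python sketch of how they can be derived from per-token timestamps. It is an illustration only, not MLPerf Client's own measurement code: the names `GenerationMetrics` and `summarize_generation` are hypothetical, and it assumes one common convention in which tokens per second is averaged over the tokens generated after the first one.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class GenerationMetrics:
    time_to_first_token_s: float  # delay between the request and the first token
    tokens_per_second: float      # average rate for the tokens after the first


def summarize_generation(
    request_time_s: float, token_times_s: Sequence[float]
) -> GenerationMetrics:
    """Derive both metrics from timestamps (in seconds) of each generated token.

    Assumption: the generation rate excludes the first token, so prompt
    processing time is reflected only in time to first token.
    """
    if len(token_times_s) < 2:
        raise ValueError("need at least two token timestamps")

    ttft = token_times_s[0] - request_time_s
    decode_time = token_times_s[-1] - token_times_s[0]
    if ttft < 0 or decode_time <= 0:
        raise ValueError("timestamps must be increasing and follow the request")

    # Tokens after the first, divided by the time spent producing them.
    rate = (len(token_times_s) - 1) / decode_time
    return GenerationMetrics(time_to_first_token_s=ttft, tokens_per_second=rate)


if __name__ == "__main__":
    # Hypothetical timestamps, not measured results.
    print(summarize_generation(0.0, [0.5, 0.75, 1.0, 1.25, 1.5]))
```

A lower time to first token means the response starts sooner, while a higher tokens-per-second figure means the rest of the response streams faster, which is why the two are reported separately.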

MLPerf Client v1.0 also adds optional support for testing Microsoft’s Phi 4 Reasoning 14B model. In ACAnalysis testing, Intel was the only hardware vendor to support it.

MLPerf Client v1.0 also adds support for Windows ML (WinML), Microsoft’s recently announced API. Powered by ONNX Runtime (ORT), WinML is Microsoft’s effort to democratize AI inferencing on Windows by giving all hardware developers a consistent layer to target. In our testing, Intel was again the only vendor to support WinML in MLPerf Client v1.0.

Our results indicate that Intel not only leads in performance with its Intel Core Ultra processors but also appears to lead in compatibility, with broader LLM and runtime support. This matters because consumers want to know that their AI PCs not only run fast but also deliver solid AI experiences out of the box.